A famous (and controversial) experiment in 1976 by Ekman and Friesen tried to study the facial expressions of individuals in response to certain stimuli. The responses gauged eventually led to the development of the Facial Action Coding System (FACS), which advanced the taxonomy of human emotions. FACS is a cornerstone of modern facial coding applications: after all, from photography to computer vision, all facial coding applications mediate their actions through ‘reading’ emotions. These applications also make use of Emotion Artificial Intelligence (AI), a “collect-all term to refer to affective computing techniques, machine learning and Artificial Intelligence” dating back to 1995.

Affective computing techniques have an inherent capacity to measure the emotional state of the subject and as Andrew McStay, Professor of Digital Life at Bangor University, explains, Affective AI specifically “reads and reacts to emotions through text, voice, computer vision and biometric sensing” to “describe and predict behavioural patterns among people.”

Present-day Emotion AI tools make use of enhanced FACSs which track the movement and geometry of human faces. As a result, they can not only identify primary emotions like joy, fear, and contempt, but also have the potential of gauging advanced emotions like depression, frustration, and confusion. It’s no wonder then, that advertising industries globally have taken a shine to emotion AI, to strengthen their marketing and consumer engagement efforts. However, education systems have been closely engaged with this technology too—in fact, way back in 2001, Rosalind Picard sought to develop a computerised system that would aid a student’s learning by monitoring their emotional state. As McStay notes, this was a significant step in the history of ed-tech, as “education systems do not focus sufficiently on how rather than what students learn.”

The evolution of applied emotion AI in the classroom since then has been wide-ranging. In China, emotion-sensing technologies embedded in classroom security cameras are equipped with AI tools that can take attendance and track students’ activities while in the room. In India, schools increasingly deployed AI for ‘security and discipline’ (before COVID-19, at least), including modern CCTV cameras that use facial recognition technology.

But beyond policing students, as Picard imagined, emotion AI can also be used to aid their learning. As a result, ed-tech learning platforms across the world have also taken a shine to emotion AI. In India, where the ed-tech market is projected to grow to $1.7 billion by 2022 (a six-fold increase from its 2019 valuation of $265 million), the growing use of emotion AI in homegrown platforms should not be underestimated. This is especially so given that the new National Education Policy, 2020 (NEP) presses the need to advance core AI research.

However, the unique concern with Emotion AI is that it creates an understanding of emotional life that suits the progression of data analytics, but not the interests of the students using it. The risks range from unwanted attention to behaviour and the body, to Emotion AI’s ability to manipulate the behaviour of students in response to certain conditions and stimuli. In the absence of a Data Protection Bill, as well as research on both the technological and educational implications of the use of Emotion AI in India, unrestricted use could give rise to a form of ‘Emotiveillance’. Sandra Wachter’s idea of “holistic notions of data about people,” or grouping people according to inferred or co-related similarities and characteristics leading to discrimination could become reality, unless novel protections for privacy are developed.

Why Emotion AI May Be Relevant to the Indian Classroom

There are several Indian players either designing Emotion AI, or at the stage of deploying it in educational spaces—Entropik Tech and Mettl, based out of Bengaluru and Gurugram respectively, are two good examples.

“Humanizing AI and adding emotions to consumer experiences,” is the vision of Entropik Tech, touted to be the first emotion AI start-up in India. Entropik uses its Emotion AI technologies to “measure the cognitive and emotional responses of consumers to their content and product experiences,” and raised $8 million as part of its Series A funding as of September, 2020. It plans to utilise these funds to enhance its AI capabilities and provide predictive offerings to its clientele—which range from marketing agencies to educational services. Education is a particular focus for Entropik, with the website clearly promoting how its emotion AI, facial coding, brain mapping, and eye-mapping tools can be used in the classroom to measure cognitive learning and monitor emotions.

How? Entropik’s Facial Coding system consists of two metrics—attention and engagement—in order to measure emotional experience of users. The company has a further 17 patent claims in facial coding—one of them being its flagship product affectlab.io—and a database of 14Mil+ unique facial data points and 18K+ unique emotion data points, collected to train their AI models to draw nuanced inferences.

Gurugram-based Mettl seeks to enable organisations to make “credible people decisions across (..) talent acquisition, and development.” Among many other services, Mettl also offers AI-based proctoring services, providing an index to test the authenticity of online candidates through voice recognition (enabled through a microphone) and face recognition (enabled through a webcam). These recognition capabilities, which create data points from students’ facial expressions, eyelid or lip movements, gestures, and inflections in speech, flag any suspicious activity, which is then reported to the examining body. Mettl has been used across schools (including the Anand Niketan schools and Chirec schools) and by higher education institutions (HEIs) like IIM Bangalore.

Using Emotion AI helps schools and HEIs analyse in-class behaviour, learning patterns, and student engagement, thereby rendering assessment easier and more holistic, which is in line with the goals of the NEP and the COVID-affected education system. But, beyond the early success stories, if deployed extensively in schools, what kind of issues might arise as a result of Emotion AI? Is the Indian legal framework cohesive enough to regulate them?

Reading the Law: The Commodification of Emotions in a Surveillance Society

The goals of Emotion AI-powered companies working in education are not malicious; however, business interests often supersede the best interests and welfare of children. The widespread collection of children’s data creates a stockpile of personal data revealing the inner emotions of a child; this data, alongside other educational insights, renders children walking ID cards of information. As a result, the student body becomes a resource to mine data from and train algorithmic models with, in order to forward the business interests of ed-tech solutions.

At a policy level, imposing such socio-technical systems upon students raises the spectre of the Foucauldian concept of biopolitical governing, which refers to “strategies rooted in disciplinary practices translated into specific practices (..) governing human lives, such as correction, exclusion, normalization, disciplining and optimization.” As Lippold suggests, this creates profiles of humans to be used by the state in the future whenever it sees fit. Such strategies go against the foundational rights laid out in the Indian Constitution and the UN Convention on the Rights of the Child, that serve the best interests of the child by preventing their exploitation, be it in terms of privacy or freedom of expression, among other concerns.

This is not fear-mongering. Research has shown that patterns of muscle movement and coordination among children and young adults are still in the formative stage, so they lack strong reliability as data points. As a result, FACS could be prone to drawing the wrong conclusions about a student; using Emotion AI might lead to the targeted exclusion of students from exams and attendance lists, or to wrongful punitive measures. As has already been shown in the case of predictive policing, this may lead to an unreasonable classification of students based on imperfect algorithmic systems.

Secondly, if violation of rights is imminent, then the legal protections against manipulations by Emotion AI are ambiguous. In the present Draft Personal Data Protection Bill, 2019 (Draft PDP)—a landmark legislation to protect individual privacy online—‘biometric data’ falls under the definition of Sensitive Personal Data (SPD). There’s a catch, though—biometric data has to be able to uniquely identify a person in order to fall under the heightened protection of the Draft PDP.

But Emotion AI does not necessarily identify a person; it tracks their emotions. And so its regulation, which would only protect students’ interests, finds no direct mention in the Draft PDP.

The usage of Emotion AI in the case of education leads to targeted disciplinary action and profiling based on faulty systems, violating not only privacy, but bodily integrity, autonomy, self-determination, and personal control too. Clearly, the ‘identifiability’ characteristic of data should not determine protection from privacy-related harms. The ‘datafication’ or ‘commodification’ of a human body, resulting in social sorting and manipulating the subject, deserves legal protection, or at least consideration under the Draft PDP.

This is worrying given the sheer quantities and varieties of student data being collected. For example, both Entropik and Mettl require access to a webcam and face video to carry out their services. In the case of Entropik, even raw brain waves are collected using the AffectLab service to determine cognitive and affective parameters. So is more rudimentary data, such as device and browser data, contact details, user account details, and financial details like credit card numbers. Data generated by users of Mettl is hosted and stored on Amazon Web Services, leading to the aggregation and processing of Personally Identifiable Information (PII) and SPD on a private platform which is known for profiling large scale datasets, and earned Amazon $25 billion in 2018. While the explicit consent of the user to collect all of this information is needed, it is not meaningful consent. Withdrawing consent leads to cancellation of the session—placing the user, in this case, the student trying to complete their education, in a catch-22 situation.

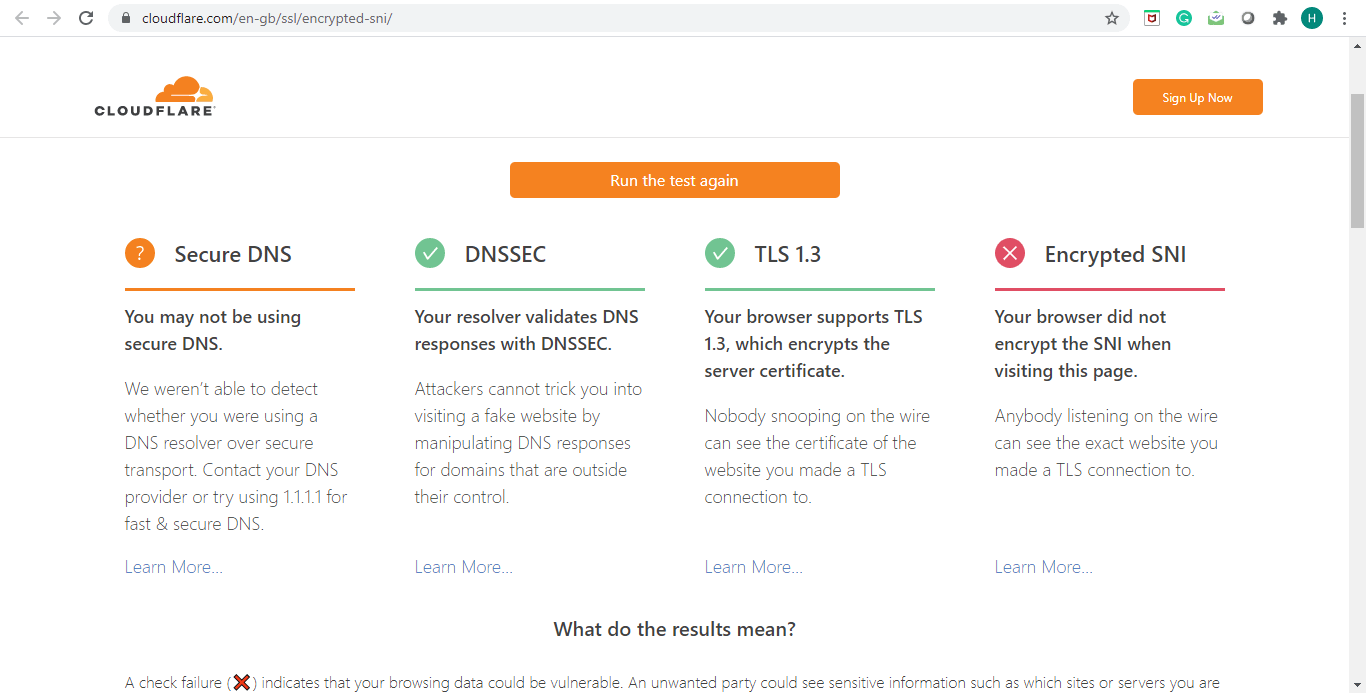

Furthermore, while companies like Mettl are GDPR compliant in that they secure all data and abide by the principles of data minimisation, they also rely on some faulty protocols to encrypt user data. For the encryption of PII or SPD in transit, Mettl enables a security protocol called Transport Layer Security (TLS) 1.2, which is also used when sending emails through Gmail.

However, these encryption tools might reveal the algorithm used for encryption, which is what protects our data, and this hampers the ‘security’ of data stored and transmitted. TLS 1.2 is also prone to leakage of a user’s metadata, which is like a label that describes the data in hand. If this happens, the length of users’ passwords and their geo-location can be easily fingerprinted and, in some cases, if home locations are leaked through phone-related metadata, users can be re-identified. This could undermine encryption and user protection.

As an alternative, the latest version of TLS, TLS 1.3, could be used. This version encrypts most of the handshake process, from shortly after the initial exchange of cryptographic data to the establishment of an encrypted connection. A companion extension, Encrypted Server Name Indication (ESNI), also encrypts the name of the server being contacted, which enhances the privacy and security of the communication. However, countries are presently debating the design vulnerabilities of TLS 1.3, making the global adoption of ESNI slow.
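
To make the TLS 1.3 suggestion concrete, here is a minimal sketch of how a client could refuse to connect over anything older than TLS 1.3, using Python's standard `ssl` module. The function names `make_tls13_only_context` and `negotiated_version` are hypothetical and chosen for illustration. The sketch does not describe how Mettl or any other provider actually configures its servers, and it does not cover ESNI.

```python
import socket
import ssl
from typing import Optional


def make_tls13_only_context() -> ssl.SSLContext:
    """Build a client context that rejects any protocol older than TLS 1.3."""
    # The default context already verifies certificates and hostnames.
    context = ssl.create_default_context()
    # Raise the protocol floor so TLS 1.2 and earlier are never negotiated.
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


def negotiated_version(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Connect to host and return the protocol version agreed on, e.g. 'TLSv1.3'.

    The handshake fails with ssl.SSLError if the server only offers TLS 1.2 or older.
    """
    context = make_tls13_only_context()
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.version()
```

Calling `negotiated_version` with the hostname of a service would show whether that service accepts a TLS 1.3 connection at all; a handshake error would suggest that it is still limited to older versions.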

The University Grants Commission (Open and Distance Learning Programmes and Online Programmes) Regulations, 2020 call for stronger privacy regulations, which such ed-tech service providers should adhere to. However, for these guidelines to be meaningfully executed, we need to have stronger legal foundations in place to guide them. In the absence of a secure encryption protocol, as well as a National Encryption Policy or Code of Anonymization, any deployment of Emotion AI in the classroom should be prohibited.

The Road Ahead

The core right at the heart of the Draft PDP, in line with the Puttaswamy verdict, is data minimisation, which prevents the processing of data unless necessary and proportionate to the processor’s needs. Emotion AI systems intend to achieve ‘emotional augmentation’: that is, they categorise certain emotions as only elicited in a given context, rendering other emotional responses during a situation worthless or invalid. This only minimises the emotional range and issues a child may be facing in the classroom, which could be better understood by teachers.

It is reasonable enough to assume that teachers are in the best position to understand the emotions of a child, and deal with them on a case-to-case basis. If teachers have been unable to do so meaningfully so far, then that calls for better sensitization during teacher training to adolescent issues, and increasing the numbers of teachers and counsellors across the country so that more time can be spent with each student.

In a judicial inquiry, it would be difficult to prove that Emotion AI is absolutely necessary for better student-teacher engagement, is in the best interest of a child, and that there is no other alternative to it which is less privacy-invasive. The principle of data minimisation here seems to be outshone by a need to ‘engineer’ Indian education in futuristic ways.

The introduction of ed-tech during COVID-19 as well as the release of the NEP should not be seen as an opportunity to carry forward the state’s intentions of heightened surveillance in the absence of rigorous data protection laws. For ed-tech to shine, building a responsible AI framework that benefits the users too, as envisaged by Niti Aayog, is necessary. To build that, as my colleagues and I have previously outlined, India needs to build stronger legal safeguards around transparency and accountability, trust and fairness, and algorithmic and human rights impact assessments. Without these systems in place, Emotion AI should not be procured, developed, or deployed, least of all for the classroom.

Featured image courtesy of Franki Chamaki on Unsplash. | Views expressed are personal.