In the past few years, it has become common knowledge that Big Tech companies like Facebook, Google, and Amazon rely on the exploitation of user data to offer seemingly free services. These companies typically use business models that rely on third party advertising to profit off this data. In exchange for their services, we hand over our data without much control or choice in the transaction.

In response to the privacy threats posed by such business models, countries around the world have been strengthening and enacting data privacy laws. India is currently debating its own personal data protection law, which is loosely based on the benchmark EU data protection law-the General Data Protection Regulation (GDPR). More recently, attention has shifted to the regulation of non-personal data as well. The Indian Government recently released a report on the Non-Personal Data Governance Framework (NPD Report).

But, why do we need to regulate non-personal data?

While progress on the regulation of personal data is necessary and laudable, in the era of Big Data and machine learning, tech companies no longer need to solely rely on processing our personally identifiable data (personal data) to profile or track users. With newer developments in data analytics, they can find patterns and target us using seemingly innocuous data that may be aggregated or anonymised, but doesn’t need to be identifiable.

For example, they only need to know that I am a brown male in the age range of 25-35, from New Delhi, looking for shoes, and not necessarily my name or my phone number. All of this is “non-personal” data as it’s not linked to my personal identity.

Clearly, tech companies extract value from their service offerings using advanced data analytics and machine learning algorithms which rummage through both personal and non-personal data. This shift to harnessing non-identifiable/anonymised/aggregated data creates a lacuna in the governance of data, as traditionally, data protection laws like the GDPR have focused on identifiable data and giving an individual control over their personal data.

Data privacy is not something that can be effectively regulated at the individual level because it is something akin to air pollution, a public good that requires a collective response. That’s why GDPR in Europe doesn’t work. From a piece I wrote in 2018. https://t.co/OFWAcpJvkS pic.twitter.com/zPo8gHfioi

— zeynep tufekci (@zeynep) August 27, 2020

So, among other economic proposals, the NPD Report proposes a policy framework to regulate such anonymised data, to fill this lacuna. The question now is: how well do its recommendations meet up to the challenges of regulating non-personal data?

How Does The Government Define Non-Personal Data?

The NPD Report proposes the regulation of non-personal data, which it defines as data that is never related to an identifiable person, such as data on weather conditions, or personal (identifiable) data which has been rendered anonymous by applying certain technological techniques (such as data anonymisation). The report also recommends the mandatory cross-sharing of this non-personal data between companies, communities of individuals, and the government. The purpose for which this data may be mandated to be shared falls under three broad buckets: national security, community benefit, and promoting market competition.

However, if such data is not related to an identifiable individual, then how can it be protected under personal data privacy laws?

To address these challenges in part, the report introduces two key concepts: collective privacy and data trusts.

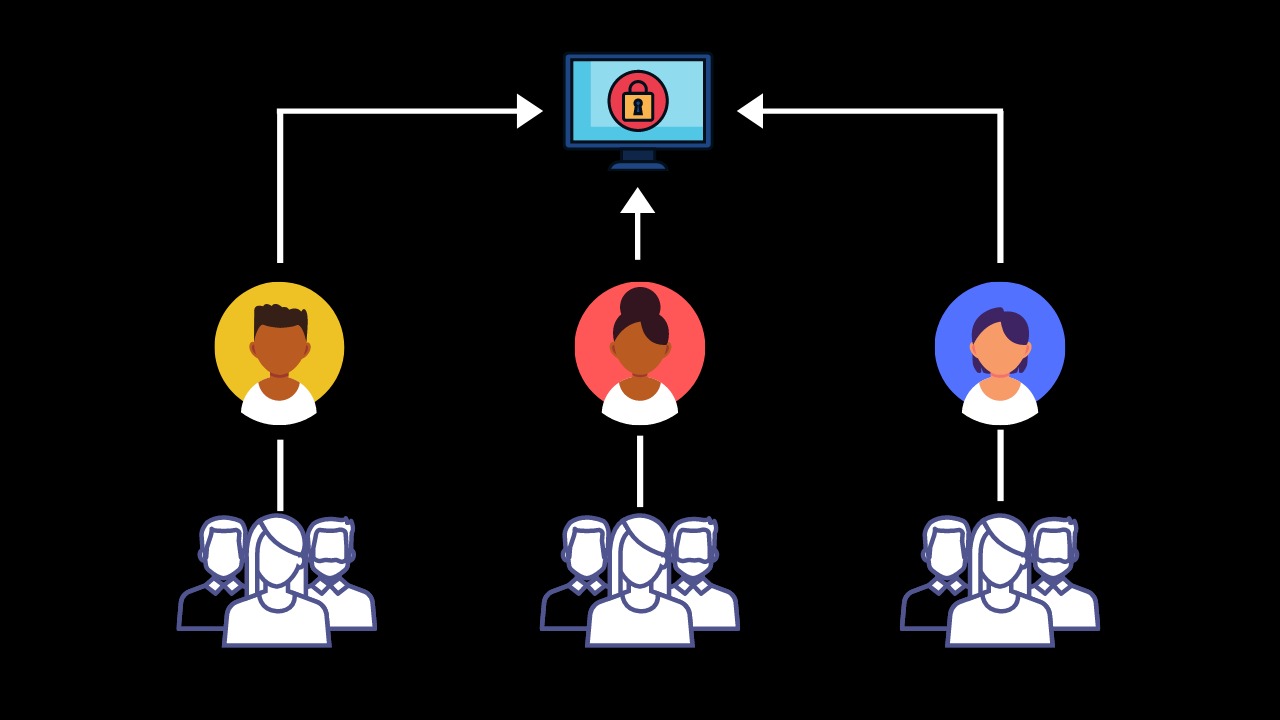

The NPD Report defines collective privacy as a right emanating from a community or group of people that are bound by common interests and purposes. It recommends that communities or a group of people exercise control over their non-personal data-which is distinct from an individual exercising control of their personal data-and do so via an appropriate nominee called a data trustee, who would exercise their privacy rights on behalf of the entire community. These two interconnected concepts of collective privacy and data trusteeship merit deeper exploration, due to their significant impact on how we view privacy rights in the digital age.

What is Collective Privacy and How Shall We Protect It?

The concept of collective privacy shifts the focus from an individual controlling their privacy rights, to a group or a community having data rights as a whole. In the age of Big Data analytics, the NPD Report does well to discuss the risks of collective privacy harms to groups of people or communities. It is essential to look beyond traditional notions of privacy centered around an individual, as Big Data analytical tools rarely focus on individuals, but on drawing insights at the group level, or on “the crowd” of technology users.

In a revealing example from 2013, data processors who accessed New York City’s taxi trip data (including trip dates and times) were able to infer with a degree of accuracy whether a taxi driver was a devout Muslim or not, even though data on the taxi licenses and medallion numbers had been anonymised. Data processors linked pauses in taxi trips with adherence to regularly timed prayer timings to arrive at their conclusion. Such findings and classifications may result in heightened surveillance or discrimination for such groups or communities as a whole.

An example of such a community in the report itself is of people suffering from a socially stigmatised disease who happen to reside in a particular locality in a city. It might be in the interest of such a community to keep details about their ailment and residence private, as even anonymised data pointing to their general whereabouts could lead to harassment and the violation of their privacy.

In such cases, harms arise not specifically to an individual, but to a group or community as a whole. Even if data is anonymised (and rendered completely un-identifiable), insights drawn at a group level help decipher patterns and enable profiling at the macro level.

However, the community suffering from the disease might also see some value in sharing limited, anonymised data on themselves with certain third parties; for example, with experts conducting medical research to find a cure to the disease. Such a group may nominate a data trustee-as envisioned by the NPD Report-who facilitates the exchange of non-personal data on their behalf, and takes their privacy interests into account with relevant data processors.

This model of data trusteeship is thus clearly envisioned as a novel intermediary relationship-distinct from traditional notions of a legal trust or trustee for the management of property-between users and data trustees to facilitate the proper exchange of data, and protect users against privacy harms like large-scale profiling and behavioral manipulation.

But, what makes data trusts unique?

Are Data Trusts the New ‘Mutual Funds’?

Currently, data processors process a wide-range of data-both personal and non-personal-about users, without providing them accessible information about how they use or collect it. These users, if they wish to use services offered by data processors, do not have any negotiating powers over the collection or processing of their data. This results in information asymmetries and power imbalances between both parties, without much recourse to users-especially in terms of non-personal data which is not covered by personal data protection laws like the GDPR, or India’s Draft Personal Data Protection Bill.

You May Also Like: Amidst COVID-19, Who is Watching Over Children’s Data on Ed-Tech Platforms?

Data trusts can help solve the challenges arising during everyday data transactions taking place on the Internet. Acting as experts on behalf of users, they may be in a better position to negotiate for privacy-respecting practices as compared to individual users. By standardising data sharing practices like data anonymisation and demanding transparency in data usage, data trusts may also be better placed to protect collective privacy rights as compared to an unstructured community. One of the first recommendations to establish data trusts in the public fora came from the UK Government’s independent report from 2017, ‘Growing the artificial intelligence industry in the UK’, which recommended the establishment of data trusts for increased access to data for AI systems.

Simply put: data trusts might be akin to mutual fund managers, as they facilitate complex investments on behalf of and in the best interests of their individual investors.

The Fault in Our Data Sarkaar

Since data trusts are still untested at a large scale, certain challenges need to be anticipated at the time of their conceptualisation, which the NPD Report does not take account of.

For example, in some cases, the report suggests that the role of the data trustee could be assumed by an arm of the government. The Ministry of Health and Family Welfare, for instance, could act as a trustee for all data on diabetes for Indian citizens.

However, the government acting as a data trustee raises important questions of conflict of interest-after all, government agencies might utilise relevant non-personal data for the profiling of citizens. The NPD Report doesn’t provide solutions for such challenges.

Additionally, the NPD Report doesn’t clarify the ambiguity in the relationship between data trusts and data trustees, adding to the complexity of its recommendations. While the report envisions data trusts as institutional structures purely for the sharing of given data sets, it defines data trustees as agents of ‘predetermined’ communities who are tasked with protecting their data rights.

Broadly, this is just like how commodities (like stocks or gold) are traded over an exchange (such as data trusts) while agents such as stockbrokers (or data trustees) assist investors in making their investments. This is distinct from how Indian law treats traditional conceptions of trusts and trustees, and might require fresh law for its creation.

In terms of the exchange of non-personal data, possibly both these tasks-that is, facilitating data sharing and protecting data rights of communities/groups-can be delegated to just one entity: data trusts. Individuals who do not form part of any ‘predetermined’ community-and thus may not find themselves represented by an appropriate trustee-may also benefit from such hybrid data trusts for the protection of their data rights.

The Data Empowerment & Protection Architecture will empower individuals with control over how their personal data is used & shared while ensuring that privacy considerations are addressed.

Seeking your comments on the draft document, before 1st Oct

?️ - https://t.co/Rdux9Xs8V7 pic.twitter.com/Urg5pK5wem

— NITI Aayog (@NITIAayog) September 3, 2020

Clearly, multiple cautionary steps need to be in place for data trusts to work, and for the privacy of millions to be protected-steps yet to be fully disclosed in the Report.

Firstly, there is a need for legal and regulatory mechanisms that will ensure that these trusts genuinely represent the best interests of their members. Without a strong alignment with regulatory policies, data trusts might enable the further exploitation of data, rather than bringing about reforms in data governance. Borrowing from traditional laws on trusts, a genuine representation of interests can be ensured by placing a legal obligation-in the form of an enforceable trust deed- on the trust of a fiduciary duty (or duty of care) towards its members.

Secondly, data trusts will require money to operate, and developing funding models that ensure the independence of trusts and also serve their members’ best interests. Various models will need to be tested before implementation, including government funded data trusts and user-subscription based systems.

Thirdly, big questions about the transparency of data trusts remain. As these institutions may be the focal point of data exchange in India, ensuring their independence and accountability will be crucial. Auditing, continuous reviews, and reporting mechanisms will need to be enmeshed in future regulation to ensure the accountability of data trusts.

Privacy Rights Must Be Paramount

As the law tries to keep pace with technology in India, recognising new spheres which require immediate attention, like the challenges of collective privacy, becomes pertinent for policymakers. The NPD Report takes momentous strides in recognising some of these challenges which require swift redressal, but fails to take into consideration emerging scholarship on the autonomy, transparency, and strength of its proposed data trusts.

For example, large data processors will need to be incentivised to engage with data trusts. Smaller businesses may engage with data trusts easily considering the newfound easy access to large amounts of data. But, it might be difficult to incentivise Big Tech companies to engage with such structures, due to their existing stores of wide-scale data on millions of users. This is where the government will need to go back to the drawing board and engage with multiple stakeholders to ensure that innovation goes hand in hand with a privacy respecting data governance framework. Novel solutions like data trusts should be tested with pilot projects, before being baked into formal policy or law.

More than three years after India’s Supreme Court reaffirmed the right to privacy as intrinsic to human existence and a guarantee under the Indian Constitution, government policy continues to treat data-whether personal or non-personal-as a resource to be ‘mined’. In this atmosphere, to meaningfully recognise the right to privacy and self-determination, the government must lay down a data governance framework which seeks to protect the rights of users (or data providers), lays down principles of transparency and accountability, and establishes strong institutions for enforcement of the law.

India’s new digital landscapes require new, flexible regulations—but, how do you develop laws for unchartered terrain? For some answers, click here to read more under ‘Regulating for a Digital Future’, curated by The Bastion and the Centre for Communication Governance, NLU Delhi.

The Bastion is happy to announce a new Technology vertical, where we’ll be covering how the future intersections of tech, policy, and society will affect India’s development journey. To read more of our technology coverage, click here. Interested in writing for us? Click here to read our submissions guidelines.

Views expressed are personal.

[…] You May Also Like: Group Privacy and Data Trusts: A New Frontier for Data Governance? […]

[…] You May Also Like: Group Privacy and Data Trusts: A New Frontier for Data Governance? […]

[…] regulatory framework of NPD with the objective of protecting privacy. Why? One reason for this, as Shashank Mohan has previously noted, is that even NPD can be used to algorithmically profile groups of people by […]